All the Privacy Scandals in One Place

When you're thinking through a privacy issue, it helps to look at the relevant precedent. But that precedent isn't easy to find: some of it is in FTC consent decrees, some of it is in class action lawsuits, and some of it is in press stories that, for one reason or another, never turned into litigation.

To make it easier, I've compiled a list of privacy scandals. I've also categorized them based on what I think is the primary reason the issue turned into a scandal (more on this below).

Intentional Disclosure (Privacy)

To Other Users

- Superhuman disclosed email recipients' location to email senders.

- Hipchat proposed giving chat content to employers.

- Google Buzz disclosed users' most-emailed contacts by exposing part of their GMail friend graph in their public profiles (i.e., the people they emailed most frequently appeared as "followers" or "followed-by").

- Google Buzz posts were public by default and indexed for Google Search.

- In December 2009, Facebook changed people's profile pictures, friend lists, and "liked" pages to be publicly available by default.

- Triplebyte announced plans to make its users' resumes publicly available (it then retracted those plans).

- Venmo disclosed financial transactions that users specified as private.

To Other Companies

- IBM's Weather Channel App collected and disclosed users' location for ad targeting.

- Facebook disclosed users' profile information to the third-party apps their friends installed (see the original FTC complaint and the Cambridge Analytica one).

- Myspace disclosed users' FriendIDs, and therefore profiles, to advertisers (FTC complaint).

- Facebook disclosed users' Facebook IDs to advertisers when those users clicked on their ads.

- GMail extensions can scan the contents of your email.

- Avast: Motherboard released a report in January 2020 about Avast’s practice of collecting users’ browsing data and selling it to third-party advertisers. This data was de-identified and aggregated, and users had the ability to opt out (in fact, new users who’d signed up after July 2019 were given an opt-in). Regardless, Motherboard, PCMag, and other news outlets still considered this practice invasive. As a result of this press cycle, Avast shut down this data collection altogether.

To Your Employees

- Evernote proposed letting employees review users' content to improve its AI algorithm.

- Makers of smart speakers and voice assistants (Alexa, Apple Siri, etc.) let employees and contractors review users' voice commands to improve their AI algorithms (Bloomberg article here).

- An Uber executive used its rider-tracking technology, called "God View," to track the location of journalists.

To Law Enforcement

- Ring and Nest disclosed camera locations to law enforcement.

Inadvertent Disclosure (Abuse)

- Snapchat recipients could easily keep the "disappearing" photos people sent them by connecting their phone to a PC, taking a screenshot and pressing the Home button repeatedly, or using a third-party tool.

- Snapchat didn't verify users' phone numbers on sign-up, allowing users to impersonate other users by claiming their phone numbers. This led to the unintentional disclosure of embarrassing photos.

- Behavioral ad targeting can reveal sensitive information, like a health condition, on shared devices.

Surveillance and Collection

- Sears collected everything users were doing in a web browser, including the information they typed into form fields.

- Facebook's market research app collected everything people were doing on their phones.

- Snapchat, among other companies, collected users' location in contravention of its privacy policy (this happens a lot).

- Snapchat collected every one of a user's contacts when the user employed the Find my Friends feature.

- Google inadvertently collected content transmitted via Wi-Fi routers as part of its Street View program (Korea, Wired, security blog).

- An NBA app inadvertently recorded audio from its users.

- Twitter used users' 2FA phone numbers to target ads.

- Verizon: In December 2021, The Verge published an article describing Verizon’s collection of users’ visited websites and apps on an opt-out basis. The article found this practice “unnerving” even though Verizon made clear that it didn’t share this information with advertisers and would “use it only for Verizon purposes.” As a result, Verizon emailed its customers to tell them that they could opt out of this browsing data collection. (The Verge was still unimpressed: “Verizon says you have a choice – you can turn it off anytime because your privacy is important to it (though not important enough to make the program opt-in instead of opt-out).”)

- Wacom: In February 2020, a software engineer published a blog post observing that Wacom’s drawing tablets were collecting and recording every app their users opened on an opt-out basis. The story gained traction on Hacker News, and media outlets like The Verge covered it. In response, Wacom stated that the app data was both anonymized and de-identified, but again, The Verge wasn’t fully satisfied: “Though there is an option to opt out . . . it ought to be the other way around. An apology and clarification should be just the start; users should be opted out as the default setting, with the option to opt in.”

Secondary Use

- GMail scanned emails to serve relevant ads. While GMail users ostensibly consented to the practice, email senders didn't and sued (Law Review Article here).

Some Additional Thoughts

Most Privacy Scandals Are Driven by Disclosure.

While much is written about "surveillance capitalism" and being tracked across the web, people are primarily concerned with the disclosure of private information. Even critics of behavioral advertising focus on the risks of disclosure (see Boyden: "[T]he harms described [in content scanning] tend to be actual or potential disclosure harms").

As a result, when an issue involved more than one practice (for example, both surveillance and disclosure, or both secondary use and disclosure), I classified it as a disclosure issue.
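For readers who keep a list like this in a spreadsheet or script, the tie-breaking rule above can be sketched in a few lines of Python. This is only an illustration, not how the list was actually built: the names `Category`, `PRECEDENCE`, and `primary_category` are hypothetical, and the relative order of surveillance and secondary use is an assumption, since the text only says that disclosure takes priority.

```python
from enum import Enum


class Category(Enum):
    DISCLOSURE = "disclosure"
    SURVEILLANCE = "surveillance and collection"
    SECONDARY_USE = "secondary use"


# Disclosure always wins. The order of the remaining two categories
# is an assumption made for this sketch; the text does not rank them.
PRECEDENCE = [Category.DISCLOSURE, Category.SURVEILLANCE, Category.SECONDARY_USE]


def primary_category(practices):
    """Return the single category a scandal is filed under."""
    for category in PRECEDENCE:
        if category in practices:
            return category
    raise ValueError("a scandal must involve at least one practice")


# A scandal that involved both collection and disclosure
# is filed under disclosure.
example = {Category.SURVEILLANCE, Category.DISCLOSURE}
print(primary_category(example).value)
```

A scandal that involves only collection stays under surveillance and collection; the rule matters only when disclosure appears alongside something else.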

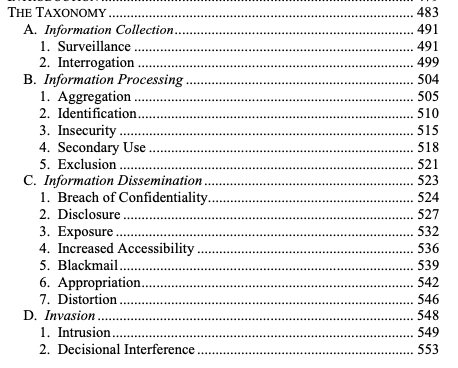

I Don't Find Daniel Solove's Taxonomy of Privacy Useful in Counseling Privacy Issues.

Perhaps if there were more available precedent, like hundreds of scandals instead of tens of them, it would be useful to make finer distinctions.

Distinguishing Privacy vs. Security vs. Abuse.

- Privacy issues are ones that result from the intended, ordinary use of the product.

- Abuse issues are ones that result from the abuse or malevolent use of the product.

- Security issues are unintended disclosures of information.

These distinctions may be useful if you're trying to figure out which team is responsible for spotting and managing which issues.

Who's Responsible for Counseling Algorithmic Bias?

I honestly don't know, because I haven't had to deal with that issue before. However, if your algorithms are making important decisions about people (like whether to offer them a loan), someone should be responsible for reviewing those decisions for mistakes.